“If AI can recognize disease progression early, then treatments and outcomes will improve.”

Isn’t it fascinating how little we understand about the brain? One promising use of deep learning is recognizing subtle patterns and changes in neuron activity to help diagnose Alzheimer’s disease early. Using Positron Emission Tomography (PET) scans, researchers can measure how much glucose brain cells consume.

A healthy brain cell consumes glucose to function: the more active a cell is, the more glucose it consumes. As the cell deteriorates with disease, the amount of glucose it uses drops and eventually reaches zero. If doctors can detect this pattern of falling glucose consumption sooner, they can administer drugs earlier to try to preserve cells that would otherwise die as Alzheimer’s progresses.

“One of the difficulties with Alzheimer’s disease is that by the time all the clinical symptoms manifest and we can make a definitive diagnosis, too many neurons have died, making it essentially irreversible.”

JAE HO SOHN, MD, MS

Human radiologists are very good at detecting a focal tumor, but subtle global changes over time are harder to spot with the naked eye. AI is well suited to analyzing time-series data and identifying small patterns.

Another area of research where AI is being applied to improve diagnosis is osteoporosis: detecting the disease and tracking its progression by comparing subtle changes across a time series of bone images.

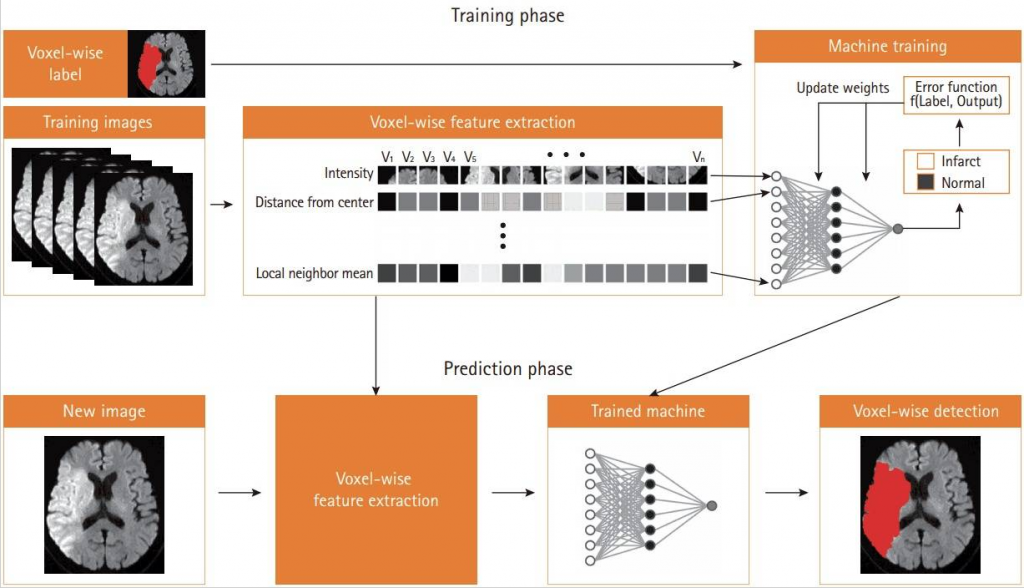

Stroke management is another area where machine learning has started to assist radiologists and neurologists. For example, here is a picture of how computers are trained on stroke imaging and how the resulting model is then used to predict whether a “new image” shows infarctions or not (a yes-or-no answer).
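To make the train-then-predict idea concrete, here is a minimal sketch in plain Python. It is not how a real stroke model works: it assumes each image has already been reduced to a single made-up feature (say, a normalized lesion score between 0 and 1), and it fits a tiny logistic regression instead of a deep network. The data, the feature, and the function names (`train`, `has_infarction`) are all hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(features, labels, lr=1.0, epochs=2000):
    """Fit a one-feature logistic regression with plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(w * x + b) - y  # predicted probability minus true label
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def has_infarction(x, w, b, threshold=0.5):
    """Yes-or-no answer for a new image's feature value."""
    return sigmoid(w * x + b) >= threshold

# Hypothetical toy data: one made-up feature per labeled training image.
train_features = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
train_labels = [0, 0, 0, 1, 1, 1]  # 0 = no infarction, 1 = infarction

w, b = train(train_features, train_labels)

print(has_infarction(0.85, w, b))  # a "new image" with a high score
print(has_infarction(0.15, w, b))  # a "new image" with a low score
```

The shape is the same as in the picture: labeled examples go in during training, and the trained model then answers yes or no for an image it has never seen.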

Furthermore, the ML model can identify the exact location of the stroke and highlight it for physicians, saving precious time and helping expedite treatment. In stroke treatment, seconds shaved off can mean the difference between life and death.

In radiology, deep learning can be useful for lesion or disease detection, classification, quantification, and segmentation.

“Deep learning is a class of machine learning methods that are gaining success and attracting interest in many domains, including computer vision, speech recognition, natural language processing, and playing games. Deep learning methods produce a mapping from raw inputs to desired outputs (eg, image classes)”. __RSNA

Convolutional neural networks (CNNs) have become popular for identifying patterns in data automatically, without hand-engineered features, especially in image processing. CNNs are loosely modeled on biological neuron structures. Here is an example of how biological neurons detect edges in response to visual stimuli, i.e., seeing.

And here is how a similar structure can be built using CNNs:

CNN representation of biological neurons
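The edge-detection idea can be sketched in a few lines of plain Python. This is only an illustration: the image is a made-up 4x6 grid, and the kernel is a hand-written Sobel-style filter, whereas a real CNN learns its kernel values from training data. The helper name `convolve2d` is my own.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products.

    This is cross-correlation, which is what deep learning frameworks
    compute in a "convolution" layer.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Toy image: dark (0) on the left half, bright (1) on the right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Sobel-style kernel that responds where brightness increases left to right.
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

response = convolve2d(image, vertical_edge)
for row in response:
    print(row)
# [0, 4, 4, 0]
# [0, 4, 4, 0]
```

The response is zero over the flat regions and large only where the window straddles the dark-to-bright boundary, which is the "edge detector" behavior described above.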

The term “deep” in deep learning comes from the fact that there are multiple layers between the inputs and the outputs, as represented in the simplified diagram below.
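In code, "multiple layers between inputs and outputs" just means feeding the output of one layer into the next. The sketch below assumes small fully connected layers with hand-picked, hypothetical weights (a trained network would learn them) and a ReLU between layers; the names `dense`, `relu`, and `forward` are illustrative.

```python
def relu(values):
    """Keep positive values, replace negatives with zero."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: output[k] = sum_i inputs[i] * weights[i][k] + biases[k]."""
    return [
        sum(x * row[k] for x, row in zip(inputs, weights)) + biases[k]
        for k in range(len(biases))
    ]

def forward(inputs, layers):
    """Pass the inputs through every layer in order."""
    activations = inputs
    for idx, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if idx < len(layers) - 1:  # no ReLU after the final (output) layer
            activations = relu(activations)
    return activations

# Hypothetical weights, chosen by hand so the arithmetic is easy to follow.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),   # 2 inputs  -> 2 hidden units
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, -1.0]),   # 2 hidden  -> 2 hidden units
    ([[1.0], [1.0]], [0.5]),                   # 2 hidden  -> 1 output
]

output = forward([2.0, 4.0], layers)
print(output)  # [4.5]
```

Adding depth is a matter of appending more `(weights, biases)` pairs to `layers`; the forward pass itself does not change.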

If we apply the CNN structure above to radiology images as inputs, to detect disease or segment the image, the output can highlight the areas of possible disease and/or state what the image might represent.
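As a rough sketch of what such outputs could look like, suppose a segmentation network has already produced a per-pixel probability of disease. The values below are invented, the 0.5 threshold is an arbitrary assumption, and `summarize` is a hypothetical helper; the point is only how one probability map can yield a highlighted region, an image-level label, and a simple quantity.

```python
def summarize(prob_map, threshold=0.5):
    """Turn a per-pixel probability map into a mask, a label, and an area."""
    # Segmentation: mark every pixel at or above the threshold.
    mask = [[1 if p >= threshold else 0 for p in row] for row in prob_map]
    flagged = sum(sum(row) for row in mask)
    total = len(prob_map) * len(prob_map[0])
    return {
        "mask": mask,                      # which pixels to highlight
        "suspicious": flagged > 0,         # image-level yes/no
        "area_fraction": flagged / total,  # share of the image flagged
    }

# Hypothetical model output for a 4x4 image.
prob_map = [
    [0.1, 0.2, 0.1, 0.0],
    [0.1, 0.8, 0.9, 0.1],
    [0.0, 0.7, 0.6, 0.2],
    [0.1, 0.1, 0.2, 0.1],
]

result = summarize(prob_map)
for row in result["mask"]:
    print(row)
print(result["suspicious"], result["area_fraction"])
```

Here the mask lights up the 2x2 block in the middle, the image is flagged as suspicious, and the flagged region covers a quarter of the pixels.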

“Many software frameworks are now available for constructing and training multilayer neural networks (including convolutional networks). Frameworks such as Theano, Torch, TensorFlow, CNTK, Caffe, and Keras implement efficient low-level functions from which developers can describe neural network architectures with very few lines of code, allowing them to focus on higher-level architectural issues (36–40).”

“Compared with traditional computer vision and machine learning algorithms, deep learning algorithms are data hungry. One of the main challenges faced by the community is the scarcity of labeled medical imaging datasets. While millions of natural images can be tagged using crowd-sourcing (27), acquiring accurately labeled medical images is complex and expensive. Further, assembling balanced and representative training datasets can be daunting given the wide spectrum of pathologic conditions encountered in clinical practice.”

“The creation of these large databases of labeled medical images and many associated challenges (54) will be fundamental to foster future research in deep learning applied to medical images.” __RSNA

300 applications have been identified for deep learning in radiology; check out the survey here.

Sources:

- https://pubs.rsna.org/doi/10.1148/rg.2017170077

- https://www.ucsf.edu/news/2019/01/412946/artificial-intelligence-can-detect-alzheimers-disease-brain-scans-six-years

- https://www.rheumatoidarthritis.org/ra/diagnosis/imaging/

- https://pubs.rsna.org/doi/10.1148/radiol.2019181568

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5647643/

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5789692/