“To drive better than humans, autonomous vehicles must first see better than humans.” - Nvidia

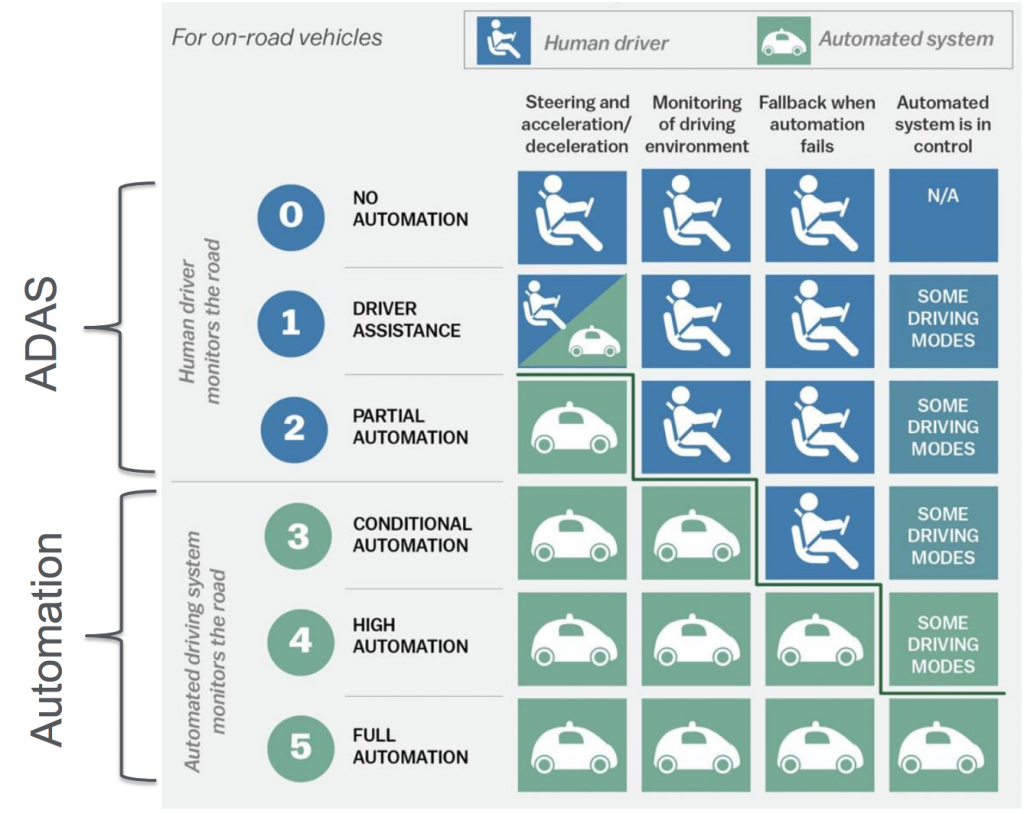

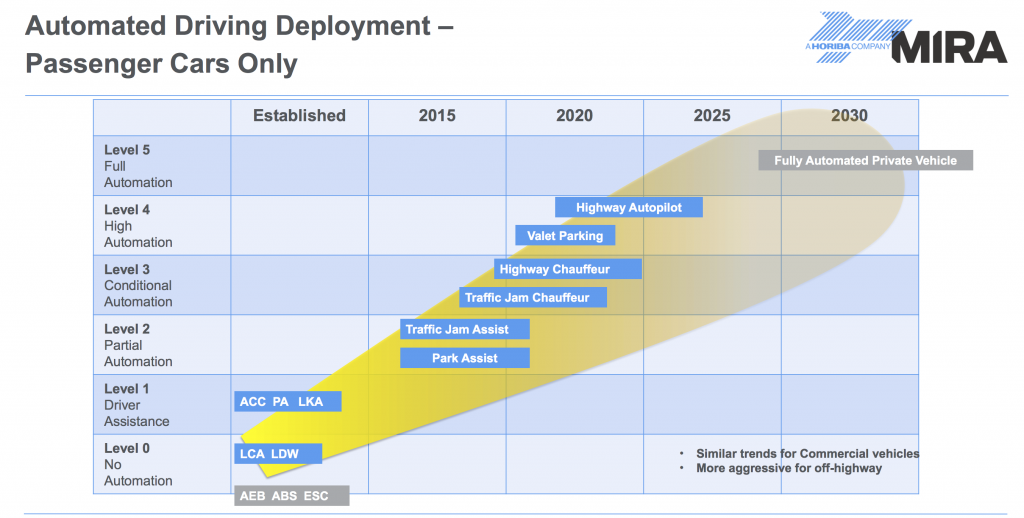

How does the technology we briefly reviewed in part 1 of this blog actually work to make self-driving possible? Before jumping into how the tech comes together, we should understand the levels a car moves through on the way to becoming fully autonomous.

Levels of autonomy

Different cars are capable of different levels of self-driving, and researchers often describe those levels on a scale of 0 to 5, where 0 means no automation and 5 means full autonomy.

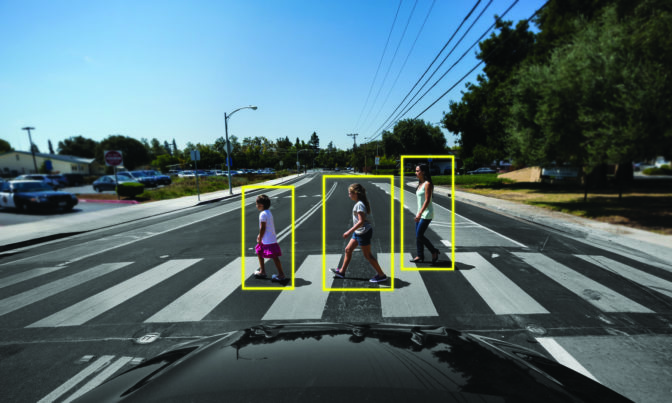

What are the challenges, and how are they being addressed with AI and tech? For example, the car needs to be able to “see” what is around it, how fast each object is moving, and how far away it is, and it must do this extremely well in terrible weather or poor lighting, not just in good light. For instance, in a picture from the rearview wide-angle camera that shows 3 moving objects, the software on the car first needs to see them, then figure out how near to the car they are, while also detecting whether they are moving or stationary.

The car uses radar to detect the distance to objects. Radar works by sending out pulses of radio waves and receiving them back as they bounce off the surface of these objects. From the time a pulse takes to return and the speed at which it travels, one can calculate the distance: since speed = distance / time (S = D/T), distance = speed × time, halved because the pulse travels to the object and back. A good thing about radar is that it works in low or zero light. Radar can tell distances and speeds, but it cannot tell what the objects are: humans, animals, vehicles, lamp posts, and so on. Lidar (light detection and ranging) sensors, often scanning a full 360 degrees, are used to get a sense of what the object might be.
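To make the radar arithmetic concrete, here is a minimal Python sketch of the time-of-flight idea. The function names and the sample timings are made up for illustration, and it assumes the pulse travels at the speed of light and that the measured time covers the trip to the object and back. Real radars usually estimate speed in other ways (for example, from the Doppler shift), so treat the second function as a simplification.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # radio waves travel at the speed of light


def distance_from_echo(round_trip_seconds: float) -> float:
    """Distance to an object from the round-trip time of one pulse.

    distance = speed * time, halved because the pulse goes out and comes back.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def closing_speed(first_distance_m: float, second_distance_m: float,
                  seconds_between: float) -> float:
    """Rough speed at which an object approaches, from two distance readings.

    Positive means the object is getting closer; zero means no change.
    (Illustrative assumption: two consecutive readings of the same object.)
    """
    if seconds_between <= 0:
        raise ValueError("the time between readings must be positive")
    return (first_distance_m - second_distance_m) / seconds_between


if __name__ == "__main__":
    # Hypothetical readings: two echoes measured half a second apart.
    d1 = distance_from_echo(2.0e-7)
    d2 = distance_from_echo(1.8e-7)
    print(f"first reading:  {d1:.2f} m")
    print(f"second reading: {d2:.2f} m")
    print(f"closing speed:  {closing_speed(d1, d2, 0.5):.2f} m/s")
```

Comparing two readings like this is also one simple way to tell whether an object is moving or stationary relative to the car.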

There is little research going on so far into sound and smell; the first focus has been on sight (being able to “see”). Imagine if cars could sense sounds and smells in and around them. Wouldn’t that be interesting? I digress...

So, cars see by using sensors (radars, lidars, cameras). These sensors capture images, distances, and 3D mappings of the surroundings, and that data is fed into the CPU, either in the car or in the cloud. Software (image recognition, data processing, and decision logic) interprets the data and sends commands to the accelerator, brake, and steering controls to carry out navigation decisions: slow down, brake, turn, or speed up.
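As a rough illustration of the "decision logic" step, here is a toy Python sketch. Everything in it is hypothetical: the `Detection` fields, the `decide` function, and the time thresholds are invented for this example and are not taken from any real vehicle. It assumes the sensors and image recognition have already produced a label, a distance, and a closing speed for each object, and it picks a command based on how many seconds remain before the car would reach that object.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str                # what image recognition thinks it is, e.g. "pedestrian"
    distance_m: float         # how far away it is, e.g. from radar or lidar
    closing_speed_m_s: float  # positive when the gap to the object is shrinking


def decide(detections: List[Detection],
           brake_below_s: float = 2.0,
           slow_below_s: float = 5.0) -> str:
    """Return "brake", "slow_down", or "maintain" (illustrative thresholds)."""
    command = "maintain"
    for d in detections:
        if d.closing_speed_m_s <= 0:
            continue  # the object is not getting closer, so ignore it
        seconds_until_contact = d.distance_m / d.closing_speed_m_s
        if seconds_until_contact < brake_below_s:
            return "brake"  # the most urgent case wins immediately
        if seconds_until_contact < slow_below_s:
            command = "slow_down"
    return command


if __name__ == "__main__":
    scene = [
        Detection("lamp post", distance_m=40.0, closing_speed_m_s=0.0),
        Detection("vehicle", distance_m=30.0, closing_speed_m_s=10.0),
    ]
    print(decide(scene))
```

A real system weighs far more than this (steering, lanes, traffic rules, uncertainty in the sensor data), but the shape is the same: interpreted sensor data goes in, a command for the controls comes out.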

Sources:

- https://selfdrivingcars.mit.edu

- https://blogs.nvidia.com/blog/2019/04/15/how-does-a-self-driving-car-see/

- https://cs.stanford.edu/people/karpathy/convnetjs/

- https://www.ucsusa.org/clean-vehicles/how-self-driving-cars-work